Data Compression: Storage Space, Coding Requirements and Basic Compression Techniques

Data Compression

In comparison to the text medium, still images have high storage requirements. Audio and particularly video pose even greater demands in this regard. The data rates needed to process and send continuous media are also considerable. This chapter thus covers the efficient compression of audio and video.

7.1 Storage Space

Uncompressed graphics, audio, and video data require substantial storage capacity. For uncompressed video data, this capacity cannot be provided even with today’s CD and DVD technology. The same is true for multimedia communications: transferring uncompressed video data over digital networks requires very high bandwidth for a single point-to-point communication. To be cost-effective and feasible, multimedia systems must use compressed video and audio streams.

Most compression methods address the same problems, one at a time or in combination. Most are already available as products. Others are currently under development or are only partially completed. While fractal image compression [BH93] may be important in the future, the most important compression techniques in use today are JPEG for single pictures, H.263 for video, MPEG for video and audio, as well as proprietary techniques such as QuickTime from Apple and Video for Windows from Microsoft.

7.2 Coding Requirements

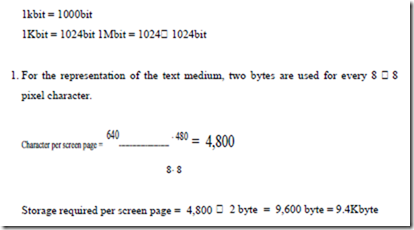

Images have considerably higher storage requirements than text, and audio and video place still greater demands on data storage. Transmitting continuous media also requires substantial communication data rates. The figures cited below clarify the qualitative transition from simple text to full-motion video data and demonstrate the need for compression. To compare the data storage and bandwidth requirements of the various visual media (text, graphics, images, and video), the following specifications are based on a small window of 640 × 480 pixels on a display. The following always holds:

2. For the representation of vector images, we assume that a typical image consists of 500 lines. Each line is defined by the coordinates of its two end points in the x and y directions, and by an 8-bit attribute field. Coordinates in the x direction require 10 bits (⌈log2(640)⌉), while coordinates in the y direction require 9 bits (⌈log2(480)⌉).

Bits per line = 9 bits + 10 bits + 9 bits + 10 bits + 8 bits = 46 bits

3. Individual pixels of a bitmap can be coded using 256 different colors, requiring a single byte per pixel.

Storage required per screen page = 640 × 480 × 1 byte = 307,200 byte = 300 Kbyte
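The arithmetic for items 2 and 3 can be reproduced with a short Python sketch. It uses only the assumptions stated above: 500 lines, two end points per line, an 8-bit attribute, 1 byte per pixel, and 1 Kbyte = 1,024 bytes. The per-page figure for the vector image (2,875 bytes, about 2.8 Kbyte) is derived here and is not given in the text.

```python
import math

def vector_image_bytes(lines: int = 500, width: int = 640, height: int = 480,
                       attribute_bits: int = 8) -> float:
    """Storage of a vector image: two (x, y) end points plus an attribute field per line."""
    x_bits = math.ceil(math.log2(width))    # 10 bits for 640 columns
    y_bits = math.ceil(math.log2(height))   # 9 bits for 480 rows
    bits_per_line = 2 * (x_bits + y_bits) + attribute_bits  # 46 bits
    return lines * bits_per_line / 8

def bitmap_bytes(width: int = 640, height: int = 480, bytes_per_pixel: int = 1) -> int:
    """Storage of a bitmap with a fixed number of bytes per pixel (256 colors -> 1 byte)."""
    return width * height * bytes_per_pixel

print(vector_image_bytes())                    # 2875.0
print(bitmap_bytes(), bitmap_bytes() / 1024)   # 307200 300.0
```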

The next examples specify continuous media and derive the storage required for one second of playback.

1. Uncompressed speech of telephone quality is sampled at 8kHz and quantized using 8bit per sample, yielding a data stream of 64Kbit/s.

3. A video sequence consists of 25 full frames per second. The luminance and chrominance of each pixel are coded using a total of 3 bytes.
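For the continuous media, the data volume for one second of playback follows directly from the stated parameters. The Python sketch below assumes that the video in item 3 uses the same 640 × 480 window as the earlier examples. Under that assumption, one second of uncompressed video occupies 23,040,000 bytes, or roughly 184 Mbit/s.

```python
def pcm_bit_rate(sample_rate_hz: int, bits_per_sample: int, channels: int = 1) -> int:
    """Data rate of uncompressed PCM audio in bit/s."""
    return sample_rate_hz * bits_per_sample * channels

def video_byte_rate(width: int, height: int, bytes_per_pixel: int, frames_per_s: int) -> int:
    """Data rate of uncompressed video in byte/s."""
    return width * height * bytes_per_pixel * frames_per_s

speech = pcm_bit_rate(8_000, 8)            # telephone-quality speech (item 1)
video = video_byte_rate(640, 480, 3, 25)   # 640 x 480 window, 3 bytes/pixel, 25 frames/s (item 3)
print(speech)                              # 64000
print(video, video * 8 / 1_000_000)        # 23040000 184.32
```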

The throughput in such a system can be as high as 140Mbit/s, which must also be transmitted over networks connecting systems (per uni-directional connection). This kind of data transfer rate is not realizable with today’s technology, or in the near future with reasonably priced hardware.

However, these rates can be considerably reduced using suitable compression techniques, and research, development, and standardization in this area have progressed rapidly in recent years. These techniques are thus an essential component of multimedia systems.

Several compression techniques for different media are often mentioned in the literature and in product descriptions:

• JPEG (Joint Photographic Experts Group) is intended for still images.

• H.263 (H.261 p×64) addresses low-resolution video sequences. This can be complemented with audio coding techniques developed for ISDN and mobile communications, which have also been standardized within CCITT.

• MPEG (Moving Picture Experts Group) is used for video and audio compression.

Compression techniques used in multimedia systems are subject to heavy demands. The quality of the compressed, and subsequently decompressed, data should be as good as possible. To make a cost-effective implementation possible, the complexity of the technique used should be minimal. The processing time required for the decompression algorithms, and sometimes also the compression algorithms, must not exceed certain time spans. Different techniques address these requirements differently.

Major Steps of Data Compression

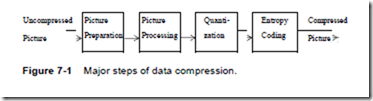

Figure 7-1 shows the typical sequence of operations performed in the compression of still images and video and audio data streams. The following example describes the compression of one image:

1. The preparation step (here picture preparation) generates an appropriate digital representation of the information in the medium being compressed. For example, a picture might be divided into blocks of 8 × 8 pixels with a fixed number of bits per pixel.

2. The processing step (here picture processing) is the first step that makes use of the various compression algorithms. For example, a transformation from the time domain (for pictures, the spatial domain) to the frequency domain can be performed using the Discrete Cosine Transform (DCT). In the case of interframe coding, motion vectors can be determined here for each 8 × 8 pixel block.

3. Quantization takes place after the mathematically exact picture processing step. Values determined in the previous step cannot and should not be processed with full exactness; instead they are quantized according to a specific resolution and characteristic curve. This can also be considered equivalent to the µ-law and A-law, which are used for audio data [JN84]. In the transformed domain, the results can be treated differently depending on their importance (e.g., quantized with different numbers of bits).

4. Entropy coding starts with a sequential data stream of individual bits and bytes. Different techniques can be used here to perform a final, lossless compression. For example, frequently occurring long sequences of zeroes can be compressed by specifying the number of occurrences followed by the zero itself.
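A toy version of the four steps, applied to a grayscale image given as a list of rows, can be sketched in Python. The block size of 8, the orthonormal DCT-II, the single uniform quantizer step of 16, and the (count, value) token format are illustrative assumptions. They are not the parameters of JPEG or of any other standard.

```python
import math

BLOCK = 8    # block size used in the example above
STEP = 16    # hypothetical uniform quantizer step

def split_into_blocks(image: list[list[int]], n: int = BLOCK) -> list[list[list[int]]]:
    """Preparation: cut an image whose height and width are multiples of n into n x n blocks."""
    return [[row[left:left + n] for row in image[top:top + n]]
            for top in range(0, len(image), n)
            for left in range(0, len(image[0]), n)]

def dct_2d(block: list[list[int]]) -> list[list[float]]:
    """Processing: orthonormal 2-D DCT-II, from pixel values to frequency coefficients."""
    n = len(block)
    scale = [math.sqrt(1 / n)] + [math.sqrt(2 / n)] * (n - 1)
    return [[scale[u] * scale[v] * sum(
                block[x][y]
                * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                * math.cos((2 * y + 1) * v * math.pi / (2 * n))
                for x in range(n) for y in range(n))
             for v in range(n)]
            for u in range(n)]

def quantize(coeffs: list[list[float]], step: int = STEP) -> list[int]:
    """Quantization: divide by one step size, round, and read the block row by row."""
    return [round(value / step) for row in coeffs for value in row]

def zero_run_length(values: list[int]) -> list[tuple[int, int]]:
    """Entropy-coding stage (simplified): emit (count, value) pairs; zero runs collapse."""
    out, i = [], 0
    while i < len(values):
        if values[i] == 0:
            run = 0
            while i + run < len(values) and values[i + run] == 0:
                run += 1
            out.append((run, 0))    # number of occurrences, then the zero itself
            i += run
        else:
            out.append((1, values[i]))
            i += 1
    return out

image = [[128] * 16 for _ in range(16)]   # flat 16 x 16 test image
for block in split_into_blocks(image):
    print(zero_run_length(quantize(dct_2d(block))))   # [(1, 64), (63, 0)]
```

For a flat block, only the DC coefficient survives quantization, so 64 values shrink to two tokens.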

Picture processing and quantization can be repeated iteratively, such as in the case of Adaptive Differential Pulse Code Modulation (ADPCM). There can either be “feedback” (as occurs during delta modulation), or multiple techniques can be applied to the data one after the other (like interframe and intraframe coding in the case of MPEG). After these four compression steps, the digital data are placed in a data stream having a defined format, which may also integrate the image starting point and type of compression. An error correction code can also be added at this point.
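Delta modulation, cited above as an example of feedback, fits in a few lines of Python. The fixed step size and the starting value of 0 are assumptions of this sketch; adaptive delta modulation varies the step size instead. The encoder tracks the same staircase the decoder will rebuild, and that is the feedback loop.

```python
def delta_modulate(samples: list[float], step: float, start: float = 0.0) -> list[int]:
    """Encode each sample as one bit: 1 = step up, 0 = step down.

    The encoder compares each input with its own copy of the decoder's
    reconstruction (the feedback path), not with the previous input sample.
    """
    approx, bits = start, []
    for s in samples:
        bit = 1 if s >= approx else 0
        approx += step if bit else -step
        bits.append(bit)
    return bits

def delta_demodulate(bits: list[int], step: float, start: float = 0.0) -> list[float]:
    """Rebuild the staircase approximation from the bit stream."""
    approx, out = start, []
    for bit in bits:
        approx += step if bit else -step
        out.append(approx)
    return out

bits = delta_modulate([0, 1, 2, 3, 2], step=1)
print(bits)                              # [1, 1, 1, 1, 0]
print(delta_demodulate(bits, step=1))    # [1.0, 2.0, 3.0, 4.0, 3.0]
```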

Figure 7-1 shows the compression process applied to a still image; the same principles can also be applied to video and audio data.

Decompression is the inverse process of compression. Specific coders and decoders can be implemented very differently. Symmetric coding is characterized by comparable costs for encoding and decoding, which is especially desirable for dialogue applications. In an asymmetric technique, the decoding process is considerably less costly than the coding process. This is intended for applications where compression is performed once and decompression takes place very frequently, or if the decompression must take place very quickly. For example, an audio-visual course module is produced once, but subsequently decoded by the many students who use it. The main requirement is real-time decompression. An asymmetric technique can be used to increase the quality of the compressed images.

The following section discusses some basic compression techniques. Subsequent sections describe hybrid techniques frequently used in the multimedia field.

7.4 Basic Compression Techniques

The hybrid compression techniques often used on audio and video data in multimedia systems are themselves composed of several different techniques. For example,

7.4.1 Run-Length Coding

Data often contains sequences of identical bytes. Replacing these repeated byte sequences with the number of occurrences can reduce the amount of data substantially. This is known as run-length coding. A special marker M is needed that does not occur as part of the data stream itself. Even if all 256 possible byte values can occur in the data stream, such an M-byte can still be realized by using byte stuffing. To illustrate this, we define the exclamation mark to be the M-byte. A single occurrence of an exclamation mark is interpreted as the M-byte during decompression. Two consecutive exclamation marks are interpreted as an exclamation mark occurring within the data.

The M-byte can thus be used to mark the beginning of a run-length coding. The technique can be described precisely as follows: if a byte occurs at least four times in a row, then the number of occurrences is counted. The compressed data contain the byte, followed by the M-byte and the number of occurrences of the byte. Remembering that we are compressing at least four consecutive bytes, the number of occurrences can be offset by –4. This allows the compression of between four and 258 bytes into only three bytes. (Depending on the algorithm, one or more bytes can be used to indicate the length; the same convention must be used during coding and decoding.)

In the following example, the character C occurs eight times in a row and is compressed to the three characters C!4:

Uncompressed data: ABCCCCCCCCDEFGGG

Run-length coded: ABC!4DEFGGG
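The scheme can be sketched in Python. This sketch makes two assumptions. First, the count is stored as a single raw byte; the printed example shows it as the digit 4. Second, a count equal to the M-byte is never written, because the decoder would otherwise read “!!” as a stuffed exclamation mark.

```python
MARKER = ord("!")   # the M-byte chosen in the text
MIN_RUN = 4         # shorter runs are copied literally
MAX_COUNT = 255     # the offset count must fit into one byte

def _emit_literal(out: bytearray, byte: int) -> None:
    out.append(byte)
    if byte == MARKER:          # byte stuffing: a literal "!" is written as "!!"
        out.append(MARKER)

def rle_encode(data: bytes) -> bytes:
    out, i = bytearray(), 0
    while i < len(data):
        byte, run = data[i], 1
        while (i + run < len(data) and data[i + run] == byte
               and run < MIN_RUN + MAX_COUNT):
            run += 1
        if run - MIN_RUN == MARKER:
            run -= 1            # never emit a count byte equal to the M-byte
        if run >= MIN_RUN:
            _emit_literal(out, byte)                # the byte itself ...
            out += bytes([MARKER, run - MIN_RUN])   # ... then M-byte and count offset by -4
        else:
            for _ in range(run):
                _emit_literal(out, byte)
        i += run
    return bytes(out)

def rle_decode(data: bytes) -> bytes:
    out, i = bytearray(), 0
    while i < len(data):
        if data[i] == MARKER:
            nxt = data[i + 1]
            if nxt == MARKER:
                out.append(MARKER)                           # stuffed literal "!"
            else:
                out.extend([out[-1]] * (nxt + MIN_RUN - 1))  # byte was already emitted once
            i += 2
        else:
            out.append(data[i])
            i += 1
    return bytes(out)

print(rle_encode(b"ABCCCCCCCCDEFGGG"))   # b'ABC!\x04DEFGGG'
```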

7.4.2 Zero Suppression

Run-length coding is a generalization of zero suppression, which assumes that just one symbol appears particularly often in sequences. The blank (space) character in text is such a symbol; single blanks or pairs of blanks are ignored. Starting with sequences of three bytes, they are replaced by an M-byte and a byte specifying the number of blanks in the sequence. The number of occurrences can again be offset (by –3). Sequences of between three and 257 bytes can thus be reduced to two bytes. Further variations are tabulators used to replace a specific number of null bytes and the definition of different M-bytes to specify different numbers of null bytes. For example, an M5-byte could replace 16 null bytes, while an M4-byte could replace 8 null bytes. An M5-byte followed by an M4-byte would then represent 24 null bytes.
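Blank suppression as described can be sketched in Python. The M-byte value 0x00 is a hypothetical choice that assumes the text never contains NUL bytes, so no byte stuffing is needed. Runs are capped at 257 blanks to match the range given above.

```python
BLANK = ord(" ")
ZS_MARKER = 0x00     # hypothetical M-byte, assumed never to occur in the text
ZS_MIN_RUN = 3       # single blanks and pairs of blanks are left alone
ZS_MAX_RUN = 257     # upper bound given in the text

def suppress_blanks(data: bytes) -> bytes:
    out, i = bytearray(), 0
    while i < len(data):
        if data[i] != BLANK:
            out.append(data[i])
            i += 1
            continue
        run = 1
        while i + run < len(data) and data[i + run] == BLANK and run < ZS_MAX_RUN:
            run += 1
        if run >= ZS_MIN_RUN:
            out += bytes([ZS_MARKER, run - ZS_MIN_RUN])   # M-byte + count offset by -3
        else:
            out += data[i:i + run]                        # 1 or 2 blanks stay as they are
        i += run
    return bytes(out)

def restore_blanks(data: bytes) -> bytes:
    out, i = bytearray(), 0
    while i < len(data):
        if data[i] == ZS_MARKER:
            out += b" " * (data[i + 1] + ZS_MIN_RUN)
            i += 2
        else:
            out.append(data[i])
            i += 1
    return bytes(out)

print(suppress_blanks(b"a    b"))   # b'a\x00\x01b'
```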

7.4.3 Vector Quantization

In the case of vector quantization, a data stream is divided into blocks of n bytes each (n>1). A predefined table contains a set of patterns. For each block, the table is consulted to find the most similar pattern (according to a fixed criterion). Each pattern in the table is associated with an index. Thus, each block can be assigned an index. Such a table can also be multidimensional, in which case the index will be a vector. The corresponding decoder has the same table and uses the vector to generate an approximation of the original data stream. For further details see [Gra84] for example.
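A minimal vector quantizer can be sketched in Python. The squared-error similarity criterion, the block size of 2, and the four-entry codebook are illustrative assumptions. A trailing partial block is simply ignored in this sketch.

```python
def nearest_index(block: tuple[int, ...], codebook: list[tuple[int, ...]]) -> int:
    """Index of the codebook pattern with the smallest squared error (the fixed criterion)."""
    return min(range(len(codebook)),
               key=lambda k: sum((a - b) ** 2 for a, b in zip(block, codebook[k])))

def vq_encode(data: list[int], codebook: list[tuple[int, ...]]) -> list[int]:
    n = len(codebook[0])                       # block size n > 1
    return [nearest_index(tuple(data[i:i + n]), codebook)
            for i in range(0, len(data) - n + 1, n)]

def vq_decode(indices: list[int], codebook: list[tuple[int, ...]]) -> list[int]:
    """The decoder holds the same table and outputs the approximating patterns."""
    return [value for k in indices for value in codebook[k]]

codebook = [(0, 0), (10, 10), (0, 10), (10, 0)]   # hypothetical 2-byte patterns
indices = vq_encode([1, 2, 9, 11, 0, 8, 12, 1], codebook)
print(indices)                        # [0, 1, 2, 3]
print(vq_decode(indices, codebook))   # [0, 0, 10, 10, 0, 10, 10, 0]
```

The decoded stream is only an approximation of the input, which is what makes the technique lossy.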

7.4.4 Pattern Substitution

A technique that can be used for text compression substitutes single bytes for patterns that occur frequently. This pattern substitution can be used to code, for example, the terminal symbols of high-level languages (begin, end, if). By using an M-byte, a larger number of words can be encoded: the M-byte indicates that the next byte is an index representing one of 256 words. The same technique can be applied to still images, video, and audio. In these media, it is not easy to identify small sets of frequently occurring patterns. It is thus better to perform an approximation that looks for the most similar (instead of the same) pattern. This is the vector quantization described above.
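Pattern substitution with an M-byte can be sketched in Python. The marker value 0xFF and the four-word table are hypothetical; the marker is assumed never to occur in the input. The matching is purely byte-oriented and ignores word boundaries, so "end" inside "friend" would also be replaced. The coding remains lossless.

```python
PS_MARKER = 0xFF                              # hypothetical M-byte, assumed absent from the input
WORDS = [b"begin", b"end", b"if", b"then"]    # hypothetical table, at most 256 entries

def substitute(text: bytes) -> bytes:
    out, i = bytearray(), 0
    while i < len(text):
        for index, word in enumerate(WORDS):
            if text.startswith(word, i):
                out += bytes([PS_MARKER, index])   # M-byte, then index into the table
                i += len(word)
                break
        else:                                      # no table entry starts here
            out.append(text[i])
            i += 1
    return bytes(out)

def expand(data: bytes) -> bytes:
    out, i = bytearray(), 0
    while i < len(data):
        if data[i] == PS_MARKER:
            out += WORDS[data[i + 1]]
            i += 2
        else:
            out.append(data[i])
            i += 1
    return bytes(out)

source = b"if x then begin y end"
print(len(source), len(substitute(source)))   # 21 15
```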

7.4.7 Huffman Coding

Given the characters that must be encoded, together with their probabilities of occurrence, the Huffman coding algorithm determines the optimal code, i.e., the prefix code with the minimum average number of bits per character [Huf52]. Hence, the lengths (numbers of bits) of the coded characters differ. The most frequently occurring characters are assigned the shortest code words. A Huffman code can be determined by successively constructing a binary tree, whereby the leaves represent the characters that are to be encoded. Every node contains the relative probability of occurrence of the characters belonging to the subtree beneath the node. The edges are labeled with the bits 0 and 1.

The following brief example illustrates this process:

1. The letters A, B, C, D, and E are to be encoded and have relative probabilities of occurrence as follows:

p(A)=0.16, p(B)=0.51, p(C)=0.09, p(D)=0.13, p(E)=0.11

2. The two characters with the lowest probabilities, C and E, are combined in the first binary tree, which has the characters as leaves. The combined probability of their root node CE is 0.20. The edge from node CE to C is assigned a 1 and the edge from CE to E is assigned a 0. This assignment is arbitrary; thus, different Huffman codes can result from the same data.

3. Nodes with the following relative probabilities remain:

p(A)=0.16, p(B)=0.51, p(CE)=0.20, p(D)=0.13

The two nodes with the lowest probabilities are D and A. These nodes are combined to form the leaves of a new binary tree. The combined probability of the root node AD is 0.29. The edge from AD to A is assigned a 1 and the edge from AD to D is assigned a 0.

If root nodes of different trees have the same probability, then trees having the shortest maximal path between their root and their nodes should be combined first. This keeps the length of the code words roughly constant.

4. Nodes with the following relative probabilities remain:

p(AD)=0.29, p(B)=0.51, p(CE)=0.20

The two nodes with the lowest probabilities are AD and CE. These are combined into a binary tree. The combined probability of their root node ADCE is 0.49. The edge from ADCE to AD is assigned a 0 and the edge from ADCE to CE is assigned a 1.

5. Two nodes remain with the following relative probabilities: p(ADCE)=0.49, p(B)=0.51

These are combined into a final binary tree with the root node ADCEB. The edge from ADCEB to B is assigned a 1, and the edge from ADCEB to ADCE is assigned a 0.

6. Figure 7-2 shows the resulting Huffman code as a binary tree. The result is the following code words, which are stored in a table:

A: 001, B: 1, C: 011, D: 000, E: 010

Such a table could be generated for a single image or for multiple images together. In the case of motion pictures, a Huffman table can be generated for each sequence or for a set of sequences. The same table must be available for both encoding and decoding. If the information of an image can be transformed into a bit stream, then a Huffman table can be used to compress the data without any loss. The simplest way to generate such a bit stream is to code the pixels individually and read them line by line. Note that usually more sophisticated methods are applied, as described in the remainder of this chapter.
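The tree construction can be sketched in Python with a priority queue. Ties and the 0/1 labels are resolved by the heap order, so the resulting code words may differ from those in Figure 7-2; as noted above, the assignment is arbitrary. For the example probabilities, the code word lengths are the same: one bit for B and three bits for every other letter.

```python
import heapq
from itertools import count

def huffman_code(probs: dict[str, float]) -> dict[str, str]:
    """Build a Huffman code by repeatedly merging the two least probable subtrees."""
    if len(probs) == 1:                      # a single symbol still needs one bit
        return {sym: "0" for sym in probs}
    tie = count()                            # tie-breaker so the heap never compares dicts
    heap = [(p, next(tie), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        p0, _, codes0 = heapq.heappop(heap)  # lowest probability: edge labeled 0
        p1, _, codes1 = heapq.heappop(heap)  # second lowest: edge labeled 1
        merged = {sym: "0" + code for sym, code in codes0.items()}
        merged.update({sym: "1" + code for sym, code in codes1.items()})
        heapq.heappush(heap, (p0 + p1, next(tie), merged))
    return heap[0][2]

def huffman_encode(text: str, codes: dict[str, str]) -> str:
    return "".join(codes[ch] for ch in text)

def huffman_decode(bits: str, codes: dict[str, str]) -> str:
    reverse = {code: sym for sym, code in codes.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in reverse:     # prefix-free: the first match is the symbol
            out.append(reverse[current])
            current = ""
    return "".join(out)

probs = {"A": 0.16, "B": 0.51, "C": 0.09, "D": 0.13, "E": 0.11}
codes = huffman_code(probs)
print({sym: len(code) for sym, code in sorted(codes.items())})
# {'A': 3, 'B': 1, 'C': 3, 'D': 3, 'E': 3}
```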

If one considers run-length coding and all the other methods described so far, which produce the same consecutive symbols (bytes) quite often, it is certainly a major objective to transform images and videos into a bit stream. However, these techniques also have disadvantages in that they do not perform efficiently, as will be explained in the next step.

2. Concept of IP Telephony

IP telephony means the transmission of voice over IP networks. The basic steps for transmitting voice over an IP network are listed below and illustrated in Figure 2-1:[1]

1. Audio from a microphone or line input is A/D converted at the audio input device.

2. The samples are copied into a memory buffer in blocks of frame length.

3. The IP telephony application estimates the energy level of each block of samples.

4. A silence detector decides whether the block is to be treated as silence or as part of a talkspurt.

5. If the block is part of a talkspurt, it is coded with the selected algorithm (e.g., GSM).

6. Some header information is added to the block.

7. The block with headers is written into the socket interface (UDP).

8. The packet is transferred over a physical network and received by the peer.

9. The header information is removed, the block of audio is decoded using the same algorithm with which it was encoded, and the samples are written into a buffer.

10. The block of samples is copied from the buffer to the audio output device.

11. The audio output device D/A converts the samples and outputs them.
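Steps 3 to 6 on the sending side can be sketched in Python. Every specific value here is an assumption made for illustration:

- a 160-sample frame (20 ms at 8 kHz);
- a mean-square energy threshold for the silence decision;
- a 6-byte header holding a sequence number and a timestamp;
- plain 16-bit PCM in place of a real codec such as GSM.

Step 7 would hand each packet to a UDP socket, for example with socket.sendto, which the sketch leaves out.

```python
import struct

FRAME_LENGTH = 160          # samples per block: 20 ms at 8 kHz (assumed)
SILENCE_THRESHOLD = 100.0   # mean-square energy threshold (assumed)

def frame_energy(block: list[int]) -> float:
    """Step 3: average energy of a block of samples."""
    return sum(s * s for s in block) / len(block)

def is_talkspurt(block: list[int]) -> bool:
    """Step 4: a simple energy-threshold silence detector."""
    return frame_energy(block) >= SILENCE_THRESHOLD

def encode_block(block: list[int]) -> bytes:
    """Step 5 placeholder: 16-bit big-endian PCM instead of a real codec such as GSM."""
    return struct.pack(f"!{len(block)}h", *block)

def packetize(blocks: list[list[int]]) -> list[bytes]:
    """Steps 3-6: drop silent blocks and prepend a small hypothetical header to the rest."""
    packets = []
    for seq, block in enumerate(blocks):
        if not is_talkspurt(block):
            continue
        header = struct.pack("!HI", seq & 0xFFFF, seq * FRAME_LENGTH)  # sequence no., timestamp
        packets.append(header + encode_block(block))
    return packets

silence = [0] * FRAME_LENGTH
speech = [1000 if i % 2 else -1000 for i in range(FRAME_LENGTH)]
packets = packetize([silence, speech, silence])
print(len(packets), len(packets[0]))   # 1 326
```

Only the talkspurt is sent, which is where silence suppression saves bandwidth.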

Advantages of IP Telephony

In a relatively short period of time, IP telephony is expected to revolutionize the telecommunications industry. Advantages of IP telephony include lower-cost long distance, reduced access charges, more efficient backbones, and compelling new services. The benefits of shifting traditional voice onto packet networks can be reaped by businesses, Internet Service Providers (ISPs), traditional carriers, and others. Businesses benefit from IP telephony because it takes advantage of existing data networks, reduces operating costs because only one network has to be managed, and still provides near-toll-quality voice. In a recent survey of 52 Fortune 1000 firms conducted by Forrester Research, more than 40% of telecom managers plan to move some voice/fax traffic to IP networks by 1999. For consumers, IP telephony started with inexpensive or even free Internet calls. Although IP telephony calls lack some of the quality of calls in PSTNs, people are willing to sacrifice it for much lower costs. Many households have only one telephone line, which is often used for Web browsing; they would therefore like to use IP telephony to accept incoming calls by rerouting them to the PC.

ISPs have also become increasingly focused on IP telephony because it enables them to offer new services beyond Internet access (voice/fax), improve their network utilization, and offer voice services at significantly lower rates. It has been forecast that the Internet will carry 11% of U.S. and international long-distance traffic and 10% of the world's fax traffic by 2002.[9] In fact, UUNET, PSINet, IDT, and America Online have already announced IP telephony and fax plans for 1999.

Session Initiation Protocol

SIP is one of the most important VoIP signaling protocols operating in the application layer. SIP can set up both unicast and multicast sessions and supports user mobility. SIP handles signaling: it identifies user location and handles call setup, call termination, and busy signals. SIP can use multicast to support conference calls and uses the Session Description Protocol (SDP) to negotiate parameters.

SIP Components

Although SIP works in conjunction with other technologies and protocols, there are two fundamental components that are used by the Session Initiation Protocol:

■ User agents, which are endpoints of a call (i.e., each of the participants in a call)

■ SIP servers, which are computers on the network that service requests from clients and send back responses

User Agents

User agents are both the computer that is being used to make a call and the target computer that is being called. These form the two endpoints of the communication session. There are two components to a user agent: a client and a server. When a user agent makes a request (such as initiating a session), it is the User Agent Client (UAC), and the user agent responding to the request is the User Agent Server (UAS). Because the user agent will send a message and then respond to another, it will switch back and forth between these roles throughout a session.

Even though other devices that we’ll discuss are optional to various degrees, user agents must exist for a SIP session to be established. Without them, it would be like trying to make a phone call without having another person to call. One UA will invite the other into a session, and SIP can then be used to manage and tear down the session when it is complete. During this time, the UAC will use SIP to send requests to the UAS, which will acknowledge the request and respond to it. Just as a conversation between two people on the phone consists of conveying a message or asking a question and then waiting for a response, the UAC and UAS will exchange messages and swap roles in a similar manner throughout the session. Without this interaction, communication couldn’t exist.

Although a user agent is often a software application installed on a computer, it can also be a PDA, a USB phone that connects to a computer, or a gateway that connects the network to the Public Switched Telephone Network. In any of these situations, however, the user agent will continue to act as both a client and a server, as it sends and responds to messages.

SIP Server

The SIP server is used to resolve usernames to IP addresses, so that requests sent from one user agent to another can be directed properly. A user agent registers with the SIP server, providing it with their username and current IP address, thereby establishing their current location on the network. This also verifies that they are online, so that other user agents can see whether they’re available and invite them into a session. Because a user agent usually doesn’t know the IP address of another user agent, it sends a request to the SIP server to invite another user into a session. The SIP server then identifies whether the person is currently online and, if so, maps the username to their IP address to determine their location. If the user isn’t part of that domain, and thereby uses a different SIP server, the SIP server will also pass requests on to other servers.

In performing these various tasks of serving client requests, the SIP server will act in any of several different roles:

■ Registrar server

■ Proxy server

■ Redirect server

Registrar servers are used to register the location of a user agent that has logged onto the network. A Registrar server obtains the IP address of the user and associates it with their username on the system. This creates a directory of all those who are currently logged onto the network and where they are located. When someone wishes to establish a session with one of these users, the Registrar server’s information is referred to, thereby identifying the IP addresses of those involved in the session.

Proxy Server

Proxy servers are computers that are used to forward requests on behalf of other computers. If a SIP server receives a request from a client, it can forward the request to another SIP server on the network. While functioning as a proxy server, the SIP server can provide such functions as network access control, security, authentication, and authorization.

Redirect Server

Redirect servers are used by SIP to redirect clients to the user agent they are attempting to contact. If a user agent makes a request, the Redirect server can respond with the IP address of the user agent being contacted. This is different from a Proxy server, which forwards the request on your behalf; the Redirect server essentially tells you to contact the other party yourself. The Redirect server also has the ability to “fork” a call by splitting it to several locations. If a call was made to a particular user, it could be split to a number of different locations, so that it rang at all of them at the same time. The first of these locations to answer the call would receive it, and the other locations would stop ringing.
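The registrar, redirect, and proxy roles can be contrasted with a toy in-memory location service in Python. The class and method names are hypothetical, and no SIP messages are actually exchanged. The values 302 and 404 are SIP status codes (Moved Temporarily, Not Found), used here only as labels. The proxy method forwards a request to every registered address, which models the simultaneous ringing described above.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class LocationService:
    """Toy registrar: maps each SIP username to the IP addresses it registered from."""
    bindings: dict[str, list[str]] = field(default_factory=dict)

    def register(self, username: str, ip: str) -> None:
        """Registrar role: record where a logged-on user agent can be reached."""
        self.bindings.setdefault(username, []).append(ip)

    def redirect(self, username: str) -> tuple[int, list[str]]:
        """Redirect role: tell the caller where to go; the caller then contacts it itself."""
        contacts = self.bindings.get(username, [])
        return (302, list(contacts)) if contacts else (404, [])

    def proxy(self, username: str, request: str,
              send: Callable[[str, str], str]) -> list[str]:
        """Proxy role: forward the request on the caller's behalf to every registered address."""
        return [send(ip, request) for ip in self.bindings.get(username, [])]

registrar = LocationService()
registrar.register("bob", "192.0.2.10")   # documentation-range example addresses
registrar.register("bob", "192.0.2.20")
print(registrar.redirect("bob"))          # (302, ['192.0.2.10', '192.0.2.20'])
print(registrar.proxy("bob", "INVITE", lambda ip, req: f"{req} -> {ip}"))
```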

SIP Requests and Responses

Like HTTP, SIP is a text-based protocol that exchanges information between clients and servers, and between User Agent Clients and User Agent Servers, as a series of requests and responses. When requests are made, a number of signaling commands might be used:

■ REGISTER Used when a user agent first goes online and registers their SIP address and IP address with a Registrar server.

■ INVITE Used to invite another user agent to communicate, and then establish a SIP session between them.

■ ACK Used to accept a session and confirm reliable message exchanges.

■ OPTIONS Used to obtain information on the capabilities of another user agent, so that a session can be established between them. When this information is provided, a session isn’t automatically created as a result.

■ SUBSCRIBE Used to request updated presence information on another user agent’s status. This is used to acquire updated information on whether a user agent is online, busy, offline, and so on.

■ NOTIFY Used to send updated information on a user agent’s current status. This sends presence information on whether a user agent is online, busy, offline, and so on.

■ CANCEL Used to cancel a pending request without terminating the session.

■ BYE Used to terminate the session. Either the user agent who initiated the session, or the one being called can use the BYE command at any time to terminate the session.
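Because SIP is text-based, a request can be assembled as plain lines of text. The Python sketch below builds a minimal INVITE without a message body; a real INVITE usually carries an SDP description. The header set follows RFC 3261. The addresses, Call-ID, tag, and branch values are the illustrative ones from that RFC's examples and have no special meaning. The branch parameter must begin with the magic cookie z9hG4bK.

```python
def build_invite(caller: str, callee: str, caller_host: str, call_id: str,
                 branch: str, tag: str, cseq: int = 1) -> str:
    """Assemble a minimal SIP INVITE request (no body) as text."""
    lines = [
        f"INVITE sip:{callee} SIP/2.0",                       # request line: method, URI, version
        f"Via: SIP/2.0/UDP {caller_host};branch={branch}",
        "Max-Forwards: 70",
        f"To: <sip:{callee}>",
        f"From: <sip:{caller}>;tag={tag}",
        f"Call-ID: {call_id}",
        f"CSeq: {cseq} INVITE",
        f"Contact: <sip:{caller.split('@')[0]}@{caller_host}>",
        "Content-Length: 0",
    ]
    # Like HTTP, each header line ends with CRLF and an empty line ends the header block.
    return "\r\n".join(lines) + "\r\n\r\n"

msg = build_invite("alice@atlanta.com", "bob@biloxi.com", "pc33.atlanta.com",
                   "a84b4c76e66710@pc33.atlanta.com", "z9hG4bK776asdhds", "1928301774")
print(msg)
```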

H.323 Protocol

The H.323-series protocols are implemented in layer 5 of the TCP/IP model and run over either TCP or UDP. The H.323 series supports simultaneous voice and data transmission and can transmit binary messages that are encoded using basic encoding rules. H.323 provides a unique framework for security, user authentication, and authorization and supports conference calls and multipoint connections, as well as accounting and call-forwarding services.

H.323 components

1. Multimedia terminal: a multimedia terminal is designed to support video and data traffic and to provide support for IP telephony.

2. DNS server: as in SIP, a DNS server maps a domain name to an IP address.

3. Gateway: the gateway is a router that serves as an interface between the IP telephone system and the traditional telephone network.

4. Gatekeeper: the gatekeeper is the control center that performs all the location and signaling functions. The gatekeeper monitors and coordinates the activities of the gateway. The gateway also performs some signaling functions.

5. Multicast or multipoint control unit: this unit provides some multipoint services such as conference calls.

When user1 communicates with its DNS server, the signaling uses both TCP and UDP and is partitioned into the following five steps:

1. Call setup

2. Initial communication capability

3. Audio/video communication establishment

4. Communications

5. Call termination

When user1 dials user2’s telephone number, the first set of signals is exchanged between these two users in conjunction with opening a TCP connection: TCP-SYN, TCP-SYN-ACK, and then TCP-ACK signals are generated between the two users. At this point, the H.225.0 SETUP ON TCP signal informs the called party that the connection can be set up on TCP. The users can now register with the associated gatekeeper server and request a certain bandwidth from it.

In step 2, the communication capabilities of all endpoints available over TCP are exchanged. Step 3 implements the establishment of a logical channel, which in H.323 is unidirectional. At the end of this phase, the two endpoints are set for communications.

Step 4 comprises the communication between the two users. This phase is handled using RTP over UDP. At this step, any kind of media flow can be considered, depending on the size and type of the channel established in step 3. Finally, in step 5, the call is terminated by either user; for example, user2 initiates the termination of the call by closing the logical channel in H.245 and disconnecting the call from the gatekeeper.

RTP (Real-Time Transport Protocol)

RTP provides end-to-end delivery services for data with real-time characteristics, such as interactive audio and video. Those services include payload type identification, sequence numbering, time stamping, and delivery monitoring. Applications typically run RTP on top of UDP to make use of its multiplexing and checksum services; both protocols contribute parts of the transport protocol functionality. However, RTP may be used with other suitable underlying network or transport protocols. RTP supports data transfer to multiple destinations using multicast distribution if provided by the underlying network.

Note that RTP itself does not provide any mechanism to ensure timely delivery or provide other quality-of-service guarantees, but relies on lower-layer services to do so. It does not guarantee delivery or prevent out-of-order delivery, nor does it assume that the underlying network is reliable and delivers packets in sequence. The sequence numbers included in RTP allow the receiver to reconstruct the sender's packet sequence, but sequence numbers might also be used to determine the proper location of a packet, for example in video decoding, without necessarily decoding packets in sequence.
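The sequence numbering and time stamping mentioned above live in RTP's 12-byte fixed header (RFC 3550). The Python sketch below packs and parses that header with version 2 and no padding, extension, or CSRC entries. It restores sender order by sorting on the sequence number; unlike a real receiver, it ignores 16-bit sequence-number wrap-around. Payload type 0 (PCMU in the RFC 3551 profile) is used only as an example.

```python
import struct

RTP_VERSION = 2

def pack_rtp_header(seq: int, timestamp: int, ssrc: int,
                    payload_type: int, marker: bool = False) -> bytes:
    """12-byte fixed RTP header: V=2, P=0, X=0, CC=0, then M/PT, seq, timestamp, SSRC."""
    byte0 = RTP_VERSION << 6
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack("!BBHII", byte0, byte1, seq & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)

def unpack_rtp_header(packet: bytes) -> dict[str, int]:
    byte0, byte1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {"version": byte0 >> 6, "marker": byte1 >> 7, "payload_type": byte1 & 0x7F,
            "seq": seq, "timestamp": timestamp, "ssrc": ssrc}

def reorder(packets: list[bytes]) -> list[bytes]:
    """Reconstruct the sender's packet sequence (sequence-number wrap-around ignored)."""
    return sorted(packets, key=lambda p: unpack_rtp_header(p)["seq"])

# Packets arrive out of order; each carries a one-byte dummy payload equal to its seq.
arrived = [pack_rtp_header(s, s * 160, 0x1234, 0) + bytes([s]) for s in (3, 1, 2)]
print([p[12] for p in reorder(arrived)])   # [1, 2, 3]
```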

RTP consists of two closely linked parts:

■ the real-time transport protocol (RTP), to carry data that has real-time properties;

■ the RTP control protocol (RTCP), to monitor the quality of service and to convey information about the participants in an ongoing session. The latter aspect of RTCP may be sufficient for “loosely controlled” sessions, i.e., where there is no explicit membership control and setup, but it is not necessarily intended to support all of an application's control communication requirements. This functionality may be fully or partially subsumed by a separate session control protocol, which is beyond the scope of the RTP specification.

If both audio and video media are used in a conference, they are transmitted as separate RTP sessions. That is, separate RTP and RTCP packets are transmitted for each medium using two different UDP port pairs and/or multicast addresses. There is no direct coupling at the RTP level between the audio and video sessions, except that a user participating in both sessions should use the same distinguished (canonical) name in the RTCP packets for both so that the sessions can be associated. One motivation for this separation is to allow some participants in the conference to receive only one medium if they choose.

SCTP

TCP Issues

TCP supports the most popular suite of applications on the Internet today, and it has been enhanced in recent years to improve robustness and performance over networks of varying capacities and quality. Nevertheless, it largely retains the behavior outlined in 1981 by Internet pioneer Jon Postel in RFC 793 [4], including properties that make it a less-than-ideal transport for transaction-based processing. TCP requires a strict order-of-transmission delivery service for all data passed between two hosts. This is too confining for applications that can accept per-stream sequential delivery (partial ordering) or no sequential delivery (order-of-arrival delivery).

TCP also treats each data transmission as an unstructured sequence of bytes. It forces applications that process individual messages to insert and track message boundaries within the TCP byte stream. Applications may also need to invoke the TCP push mechanism to ensure timely data transport.
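The boundary bookkeeping that TCP leaves to message-oriented applications can be sketched in Python with a length prefix. The 4-byte big-endian prefix is one common convention and is assumed here. The receiver must buffer stream data until a whole message has arrived, because TCP may deliver the bytes in arbitrary pieces.

```python
import struct

def frame(messages: list[bytes]) -> bytes:
    """Prefix each message with its 4-byte big-endian length before writing it to the stream."""
    return b"".join(struct.pack("!I", len(m)) + m for m in messages)

class Deframer:
    """Recover message boundaries from byte-stream data that arrives in arbitrary pieces."""

    def __init__(self) -> None:
        self.buffer = bytearray()

    def feed(self, data: bytes) -> list[bytes]:
        self.buffer += data
        messages = []
        while len(self.buffer) >= 4:
            (length,) = struct.unpack("!I", self.buffer[:4])
            if len(self.buffer) < 4 + length:
                break                               # wait for the rest of the message
            messages.append(bytes(self.buffer[4:4 + length]))
            del self.buffer[:4 + length]
        return messages

stream = frame([b"hello", b"", b"world!"])
receiver = Deframer()
print(receiver.feed(stream[:3]) + receiver.feed(stream[3:11]) + receiver.feed(stream[11:]))
# [b'hello', b'', b'world!']
```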

The TCP sockets-based application-programming interface does not support multihoming. An application can only bind a single IP address to a particular TCP connection with another host. If the interface associated with that IP address goes down, the TCP connection is lost and must be reestablished.

Finally, TCP hosts are susceptible to denial-of-service attacks characterized by TCP SYN “storms,” in which a burst of TCP SYN packets arrives to signal an unsuspecting host that the sender wishes to establish a TCP connection with it. The receiving host allocates memory and responds with SYN-ACK messages. When the attacker never returns ACK messages to complete the three-way TCP connection setup handshake, the victimized host is left with depleted resources and an inability to service legitimate TCP connection setup requests.

SCTP Features

. To eliminate the traditional connotation that a “connection” is between a single source and destination address, SCTP uses the term association to define the protocol state installed on two peer SCTP hosts exchanging messages. An SCTP association can employ multiple addresses at each end. SCTP supports some features inherited from TCP and others that provide additional functionality:

■ Message boundary preservation: SCTP preserves applications' message-framing boundaries by placing messages inside one or more SCTP data structures called chunks. Multiple messages, each in its own chunk, can be bundled into a single SCTP packet. A large message can be fragmented across multiple chunks.

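■ No head-of-line blocking between streams: In TCP, network reordering or packet loss delivers packets out of order. The receiver must then resequence them, and data that has already arrived is held back until the missing data appears. SCTP avoids this delay between independent streams: a lost message delays only later messages on its own stream, and messages on other streams can still be delivered.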

■ Multiple delivery modes: SCTP supports several modes of delivery, including strict order-of-transmission (like TCP), partially ordered (per stream), and unordered delivery (like UDP).

■ Multihoming support: SCTP sends packets to one destination IP address (the primary path), but it can reroute messages to an alternate address if the current one becomes unreachable.

■ TCP-friendly congestion control: SCTP employs the standard congestion control techniques pioneered in TCP [6], including slow start, congestion avoidance, and fast retransmit. SCTP applications therefore receive a fair share of network resources when coexisting with TCP applications.

■ Selective acknowledgments: SCTP employs a selective acknowledgment scheme, derived from TCP, for packet loss recovery [7]. The SCTP receiver tells the sender exactly which DATA chunks have arrived, so the sender knows which ones to retransmit when any are lost.

■ User data fragmentation: SCTP fragments messages to conform to the maximum transmission unit (MTU) along the routed path between communicating hosts. RFC 1191 describes how to discover this path MTU, and TCP/IP optionally uses path MTU discovery [8]. Doing so avoids the performance degradation that results when IP routers must perform fragmentation.

■ Heartbeat keep-alive mechanism: SCTP sends heartbeat control packets to idle destination addresses that are part of the association. The protocol declares an IP address down once the number of unacknowledged heartbeats sent to it reaches a threshold.

■ DoS protection: To mitigate the impact of TCP SYN flooding attacks on a target host, SCTP employs a security "cookie" mechanism during association initialization. The responding host stores no association state until the initiator returns a valid cookie.
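The cookie mechanism above shifts setup state from the server's memory into a signed token that the initiator must echo back. In RFC 4960, the INIT ACK carries a State Cookie protected by a MAC, and the server creates the association only when a valid COOKIE ECHO arrives. The Python sketch below is built on several assumptions:

- The JSON encoding, the HMAC-SHA-256 choice and all function names are hypothetical.
- The 60-second lifetime mirrors RFC 4960's suggested Valid.Cookie.Life.

```python
import hashlib
import hmac
import json
import os
import time

SECRET_KEY = os.urandom(32)  # server-local secret, never sent on the wire
COOKIE_LIFETIME = 60.0       # seconds (assumed, mirrors Valid.Cookie.Life)


def make_cookie(peer_ip: str, peer_tag: int, local_tag: int, now: float) -> bytes:
    """Build a signed state cookie for the INIT ACK; the server keeps no copy."""
    state = json.dumps({"peer": peer_ip, "peer_tag": peer_tag,
                        "local_tag": local_tag, "created": now}).encode()
    mac = hmac.new(SECRET_KEY, state, hashlib.sha256).digest()  # 32 bytes
    return mac + state


def verify_cookie(cookie: bytes, now: float):
    """Check a COOKIE ECHO; return the saved state, or None to discard it."""
    mac, state = cookie[:32], cookie[32:]
    expected = hmac.new(SECRET_KEY, state, hashlib.sha256).digest()
    if not hmac.compare_digest(mac, expected):
        return None  # forged or corrupted cookie
    fields = json.loads(state)
    if now - fields["created"] > COOKIE_LIFETIME:
        return None  # stale cookie
    return fields    # only at this point would the server allocate association state


now = time.time()
cookie = make_cookie("192.0.2.10", peer_tag=0x1234, local_tag=0xABCD, now=now)
print(verify_cookie(cookie, now=now + 1))                   # the saved fields
print(verify_cookie(cookie[:-1] + b"x", now=now + 1))       # None: MAC mismatch
```

A flood of INIT chunks therefore costs the server only the CPU time to compute MACs, not memory per request.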

SCTP Data Transfer

The SCTP packet structure lets control and data messages be bundled together in a single, uniform format.

Figure 3 shows the format of an SCTP packet: a common header is followed by one or more variable-length chunks, which use a type-length-value (TLV) format. Different chunk types carry control or data information inside an SCTP packet. The SCTP common header contains source and destination port numbers. Together with the source and destination IP addresses, these identify the recipient of the SCTP packet.

The common header also carries two more fields:

■ A checksum value, which ensures data integrity while the packet transits an IP network.

■ A verification tag, which holds the value of the initiation tag first exchanged during the association setup handshake.

Any SCTP packet in an association that does not carry this tag is dropped on arrival. The verification tag protects against old, stale packets arriving from a previous association and against various "man-in-the-middle" attacks. It also removes the need for TCP's TIME-WAIT state, which consumes resources and limits the total number of connections a host can accommodate.
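The packet layout described above can be parsed with a short routine. This Python sketch assumes the RFC 4960 wire order for the 12-byte common header: source port, destination port, verification tag, then checksum. Each chunk has a 4-byte type/flags/length header and is padded to a 4-byte boundary. `parse_packet` is a hypothetical name. The sketch deliberately skips two things: checksum validation (CRC32c in RFC 4960), and special cases such as INIT packets, which carry a verification tag of 0.

```python
import struct

COMMON_HEADER = struct.Struct("!HHII")  # src port, dst port, verification tag, checksum
CHUNK_HEADER = struct.Struct("!BBH")    # chunk type, flags, length (header + value, no padding)


def parse_packet(packet: bytes, expected_vtag: int):
    """Split a packet into (header fields, [(type, flags, value), ...]) or return None to drop it."""
    if len(packet) < COMMON_HEADER.size:
        return None
    src_port, dst_port, vtag, checksum = COMMON_HEADER.unpack_from(packet, 0)
    if vtag != expected_vtag:
        return None  # stale or spoofed packet: wrong verification tag
    chunks = []
    offset = COMMON_HEADER.size
    while offset + CHUNK_HEADER.size <= len(packet):
        ctype, flags, length = CHUNK_HEADER.unpack_from(packet, offset)
        if length < CHUNK_HEADER.size or offset + length > len(packet):
            return None  # malformed chunk length
        value = packet[offset + CHUNK_HEADER.size:offset + length]
        chunks.append((ctype, flags, value))
        offset += (length + 3) & ~3  # skip padding to the next 4-byte boundary
    return (src_port, dst_port, vtag, checksum), chunks
```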

Every chunk type includes TLV header information that contains the chunk type, delivery processing flags, and a length field. In addition, a DATA chunk places four fields ahead of the user payload: the transmission sequence number (TSN), stream identifier, stream sequence number (SSN), and payload protocol identifier. Every DATA chunk therefore carries two separate sequence numbers. The TSN is used for per-association reliability, and the SSN for per-stream ordering. The stream identifier indicates the stream to which each message belongs.
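Per-stream ordering by SSN is what keeps a gap in one stream from blocking the others. The receiver-side Python sketch below shows this under stated assumptions:

- The class and method names are hypothetical.
- TSN-based reliability is assumed to be handled elsewhere, and fragmentation is ignored.
- The `unordered` flag stands in for the DATA chunk's U bit, which requests unordered delivery.

```python
from collections import defaultdict


class StreamReassembler:
    """Delivers DATA chunk payloads in SSN order per stream, independently across streams."""

    def __init__(self) -> None:
        self.next_ssn = defaultdict(int)   # stream id -> next SSN to deliver
        self.pending = defaultdict(dict)   # stream id -> {ssn: payload}

    def receive(self, stream_id: int, ssn: int, payload: bytes,
                unordered: bool = False) -> list:
        """Return the (stream id, payload) pairs that become deliverable now."""
        if unordered:
            return [(stream_id, payload)]  # bypass SSN ordering entirely
        self.pending[stream_id][ssn] = payload
        delivered = []
        while self.next_ssn[stream_id] in self.pending[stream_id]:
            ready = self.next_ssn[stream_id]
            delivered.append((stream_id, self.pending[stream_id].pop(ready)))
            self.next_ssn[stream_id] = (ready + 1) % 65536  # SSN is a 16-bit field
        return delivered
```

If SSN 0 on stream 1 is lost, SSN 1 on stream 1 waits, but a message on stream 2 is delivered at once. When the retransmitted SSN 0 arrives, both stream 1 messages are released in order.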

Figure 4 shows an example of a normal data exchange between two SCTP hosts. An SCTP host sends selective acknowledgments (SACK chunks) in response to every other SCTP packet carrying DATA chunks. The SACK fully describes the receiver’s state, so that the sender can make retransmission decisions based on what has been received. SCTP supports fast retransmit and time-out retransmission algorithms similar to those in TCP.
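The receiver state that a SACK describes can be computed from the set of TSNs received so far. Following RFC 4960, a SACK reports a cumulative TSN ack, meaning every TSN up to this value has arrived. It also reports gap ack blocks whose start and end are offsets from that cumulative value. This Python sketch is simplified: `build_sack` is a hypothetical name, and it ignores 32-bit TSN wraparound and duplicate-TSN reporting.

```python
def build_sack(received_tsns, initial_tsn):
    """Return (cumulative TSN ack, [(gap start offset, gap end offset), ...])."""
    received = set(received_tsns)
    cum_ack = initial_tsn - 1
    while cum_ack + 1 in received:
        cum_ack += 1                             # advance past every in-sequence TSN
    gaps = []
    for tsn in sorted(t for t in received if t > cum_ack):
        offset = tsn - cum_ack                   # offsets are relative to cum_ack
        if gaps and offset == gaps[-1][1] + 1:
            gaps[-1] = (gaps[-1][0], offset)     # extend the current block
        else:
            gaps.append((offset, offset))        # start a new block
    return cum_ack, gaps
```

Suppose TSNs 100, 101, 103, 104 and 107 have arrived. The SACK then reports a cumulative ack of 101 with gap blocks (2, 3) and (6, 6). From this, the sender can infer that 102, 105 and 106 are missing.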

With few exceptions, chunk types can be bundled together in one SCTP packet. For example, SACKs are often bundled with DATA chunks during two-way exchanges of user data. One bundling restriction is that control chunks must be placed ahead of any DATA chunks in the packet.
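The ordering rule for bundling can be expressed as a small packer that places control chunks first and then adds DATA chunks while they fit. This is a hypothetical sketch under stated assumptions:

- `bundle` and its overflow policy are illustrative.
- `max_payload` is an assumed input, such as the path MTU minus the IP and SCTP common headers.
- It ignores the chunk types that must never be bundled, such as INIT.

```python
def bundle(control_chunks, data_chunks, max_payload):
    """Pack encoded chunks into one packet payload, control chunks ahead of DATA chunks.

    Returns (chunks placed in this packet, DATA chunks left for the next packet).
    """
    def padded(chunk: bytes) -> int:
        return len(chunk) + (-len(chunk) % 4)  # chunks occupy a multiple of 4 bytes

    packet, used = [], 0
    for chunk in control_chunks:
        if used + padded(chunk) > max_payload:
            raise ValueError("control chunks do not fit")  # hypothetical policy
        packet.append(chunk)
        used += padded(chunk)
    leftover = list(data_chunks)
    while leftover and used + padded(leftover[0]) <= max_payload:
        chunk = leftover.pop(0)
        packet.append(chunk)
        used += padded(chunk)
    return packet, leftover  # remaining DATA chunks wait for the next packet
```

For example, take a 48-byte budget with one 16-byte SACK and DATA chunks of 10, 20 and 8 bytes. The packet carries the SACK and the first two DATA chunks (16 + 12 + 20 bytes after padding). The 8-byte chunk waits for the next packet.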

SCTP Shutdown

A connection-oriented transport protocol needs a graceful method for shutting down an association. SCTP uses a three-way handshake that differs from TCP's connection termination in one respect. A TCP endpoint can initiate the shutdown procedure while keeping the connection open and continuing to receive new data from its peer. SCTP does not support this "half-closed" state. Once a graceful shutdown sequence begins, neither side accepts new data from its upper layer.

Figure 5 depicts a typical graceful shutdown sequence in SCTP. In this example, the application on host A wishes to shut down and terminate the association with host Z. SCTP enters the SHUTDOWN_PENDING state, in which it accepts no new data from the application but still sends any data already queued for transmission to host Z. Once host Z has acknowledged all of that data, host A sends a SHUTDOWN chunk and enters the SHUTDOWN_SENT state.

Upon receiving the SHUTDOWN chunk, host Z notifies its upper layer, stops accepting new data from it, and enters the SHUTDOWN_RECEIVED state. Z transmits any remaining data to A. A responds with further SHUTDOWN chunks that acknowledge the data's arrival and reaffirm that the association is shutting down. Once all of its queued data has been acknowledged, host Z sends a SHUTDOWN-ACK chunk. Host A replies with a SHUTDOWN-COMPLETE chunk, which completes the association shutdown.
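The shutdown sequence described above can be summarized as a transition table covering both the initiating side (host A) and the receiving side (host Z). This Python sketch reuses the state names from the text and adds SHUTDOWN_ACK_SENT, the state RFC 4960 defines for Z after it sends SHUTDOWN-ACK. The event names and helper functions are hypothetical. Timers, retransmission and the abort path are omitted.

```python
# (state, event) -> (next state, chunk sent in response, or None)
TRANSITIONS = {
    # Initiating side (host A)
    ("ESTABLISHED", "app_shutdown"): ("SHUTDOWN_PENDING", None),
    ("SHUTDOWN_PENDING", "all_data_acked"): ("SHUTDOWN_SENT", "SHUTDOWN"),
    ("SHUTDOWN_SENT", "recv_DATA"): ("SHUTDOWN_SENT", "SHUTDOWN"),
    ("SHUTDOWN_SENT", "recv_SHUTDOWN_ACK"): ("CLOSED", "SHUTDOWN_COMPLETE"),
    # Receiving side (host Z)
    ("ESTABLISHED", "recv_SHUTDOWN"): ("SHUTDOWN_RECEIVED", None),
    ("SHUTDOWN_RECEIVED", "all_data_acked"): ("SHUTDOWN_ACK_SENT", "SHUTDOWN_ACK"),
    ("SHUTDOWN_ACK_SENT", "recv_SHUTDOWN_COMPLETE"): ("CLOSED", None),
}


def step(state: str, event: str):
    """Apply one event and return (next state, chunk to send)."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not handled in state {state!r}") from None


def accepts_new_app_data(state: str) -> bool:
    """No half-close: once shutdown starts, neither side takes new data from its upper layer."""
    return state == "ESTABLISHED"


# Host A's side of the exchange in Figure 5
state = "ESTABLISHED"
for event in ("app_shutdown", "all_data_acked", "recv_DATA", "recv_SHUTDOWN_ACK"):
    state, sent = step(state, event)
    print(f"{event:>18} -> {state:<17} sends {sent}")
```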
