QoS & Resource Allocation, VPN, MPLS, Overlay Networks part1

Overview of QoS

· Quality of service (QoS) refers to resource reservation control mechanisms rather than the achieved service quality.

· Quality of service is the ability to provide different priority to different applications, users, or data flows, or to guarantee a certain level of performance to a data flow.

· For example, a required bit rate, delay, jitter, packet dropping probability and/or bit error rate may be guaranteed.

· Quality of service guarantees are important when network capacity is insufficient. This applies especially to real-time streaming multimedia applications such as voice over IP, online games and IP-TV, which often require a fixed bit rate and are delay sensitive, and to networks where capacity is a limited resource, for example cellular data communication.

· In the absence of network congestion, QoS mechanisms are not required.

· A network or protocol that supports QoS may agree on a traffic contract with the application software and reserve capacity in the network nodes, for example during a session establishment phase.

· During the session it may monitor the achieved level of performance, for example the data rate and delay, and dynamically control scheduling priorities in the network nodes. It may release the reserved capacity during a tear down phase.

Approaches which provide quality support are divided into

· Integrated services

· Differentiated services

Integrated Services QoS

· IntServ or integrated services is an architecture that specifies the elements to guarantee quality of service (QoS) on networks.

· IntServ specifies a fine-grained QoS system, which is often contrasted with DiffServ's coarse-grained control system.

· The idea of IntServ is that every router in the system implements IntServ, and every application that requires some kind of guarantees has to make an individual reservation.

· Integrated services approach consists of two service classes

1. Guaranteed service class: defined for applications that cannot tolerate a delay beyond a particular value. Real-time applications such as voice or video communications use this type of service.

2. Controlled-load service class: defined for applications that can tolerate some delay and loss.

The below figure shows four processes providing quality of service

1. Traffic shaping: regulates the traffic.

2. Admission control: governs the network by admitting or rejecting the application flow.

3. Resource allocation: allows network users to reserve resources on neighboring routers.

4. Packet scheduling: sets the timetable for the transmission of packet flows.

1. Traffic shaping

· Traffic shaping provides a means to control the volume of traffic being sent into a network in a specified period, or the maximum rate at which the traffic is sent (rate limiting).

· This control can be accomplished in many ways and for many reasons; however traffic shaping is always achieved by delaying packets.

· Traffic shaping is commonly applied at the network edges to control traffic entering the network, but can also be applied by the traffic source (for example, computer or network card) or by an element in the network.

· A traffic shaper works by delaying metered traffic such that each packet complies with the relevant traffic contract.

· Metering may be implemented with, for example, the leaky bucket or token bucket algorithms.

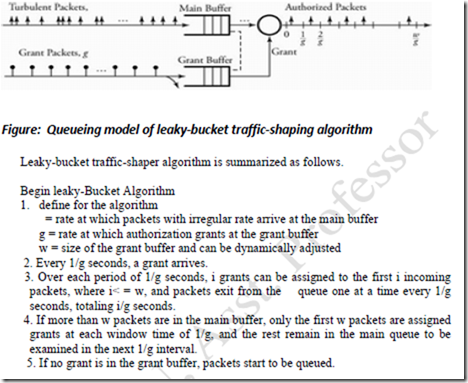

Figure: Traffic shaping to regulate any incoming turbulent traffic

The most popular traffic shaping algorithms are leaky bucket and token bucket.

Leaky Bucket Traffic Shaping

· The algorithm is used to control the rate at which data is injected into a network, smoothing out "burstiness" in the data rate.

· A leaky bucket provides a mechanism by which bursty traffic can be shaped to present a steady stream of traffic to the network, as opposed to traffic with erratic bursts of low-volume and high-volume flows.

· A leaky bucket interface is connected between a packet transmitter and the network

· No matter at which rate the packets enter the traffic shaper, the outflow is regulated at a constant rate.

· When a packet arrives, the interface decides whether that packet should be queued or discarded, depending on the capacity of the buffer.

· Incoming packets are discarded once the bucket becomes full.

· This method directly restricts the maximum size of burst coming into the system.

· Packets are transmitted as either fixed-size packets or variable-size packets.

· The leaky bucket scheme is modeled by two main buffers: one forms a queue of incoming packets and the other receives authorizations.
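The queue-and-discard behaviour described above can be sketched in a few lines of Python. This is a simplified illustration, not a reference implementation: the class name `LeakyBucket`, the tick-based clock and the parameters `capacity` and `leak_per_tick` are assumptions made for the example, and packets are treated as fixed-size units.

```python
from collections import deque


class LeakyBucket:
    """Bounded packet queue that drains at a constant rate (hypothetical model)."""

    def __init__(self, capacity, leak_per_tick):
        self.capacity = capacity            # maximum number of queued packets
        self.leak_per_tick = leak_per_tick  # packets released per time tick
        self.queue = deque()

    def arrive(self, packet):
        """Queue the packet if the bucket has room; otherwise discard it."""
        if len(self.queue) >= self.capacity:
            return False
        self.queue.append(packet)
        return True

    def tick(self):
        """Release at most leak_per_tick packets, in arrival order."""
        count = min(self.leak_per_tick, len(self.queue))
        return [self.queue.popleft() for _ in range(count)]


bucket = LeakyBucket(capacity=3, leak_per_tick=1)
# A burst of five packets arrives within a single tick.
accepted = [bucket.arrive(p) for p in ["p1", "p2", "p3", "p4", "p5"]]
# The outflow is one packet per tick regardless of how bursty the input was.
outflow = [bucket.tick() for _ in range(4)]
```

Here the last two packets of the burst are discarded because the bucket is full, and the three queued packets leave one per tick.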

Token bucket shaping

· The token bucket is a control mechanism that dictates when traffic can be transmitted, based on the presence of tokens in the bucket--an abstract container that holds aggregate network traffic to be transmitted.

· The bucket contains tokens, each of which can represent a unit of bytes or a single packet of predetermined size.

· Tokens in the bucket are removed ("cashed in") for the ability to send a packet.

· The network administrator specifies how many tokens are needed to transmit how many bytes.

· When tokens are present, a flow is allowed to transmit traffic.

· If there are no tokens in the bucket, a flow cannot transmit its packets.

· Therefore, a flow can transmit traffic up to its peak burst rate if there are adequate tokens in the bucket and if the burst threshold is configured appropriately.
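A minimal token bucket sketch follows. It assumes one token per byte, a discrete clock in which `tick()` adds `rate` tokens, and a bucket depth `burst`; these names and the tick model are illustrative choices, not part of the description above.

```python
class TokenBucket:
    """Token bucket with one token per byte (assumed for illustration)."""

    def __init__(self, rate, burst):
        self.rate = rate      # tokens added on every tick
        self.burst = burst    # maximum number of tokens the bucket can hold
        self.tokens = burst   # the bucket starts full

    def tick(self):
        """Add tokens for one time unit, never exceeding the bucket depth."""
        self.tokens = min(self.burst, self.tokens + self.rate)

    def try_send(self, size):
        """Send a packet of `size` bytes only if enough tokens are present."""
        if size <= self.tokens:
            self.tokens -= size   # tokens are "cashed in" for the transmission
            return True
        return False


tb = TokenBucket(rate=2, burst=5)
results = [tb.try_send(4), tb.try_send(3)]  # second packet finds only 1 token
tb.tick()                                   # 1 + 2 = 3 tokens available
results.append(tb.try_send(3))
```

Unlike the leaky bucket, a flow that has been idle can send a burst of up to `burst` bytes at once, after which it is limited to the token refill rate.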

2. Admission Control

· It is a network function that computes the resource (bandwidth and buffer) requirements of a new flow and determines whether the resources along the path to be followed are available.

· Before sending packets, the source must obtain permission from admission control.

· Admission control decides whether to accept the flow or not.

· Flow is accepted if the QoS of new flow does not violate QoS of existing flows

· QoS can be expressed in terms of maximum delay, loss probability, delay variance, or other performance measures.

· QoS requirements:

o Peak, average and minimum bit rate

o Maximum burst size

o Delay, Loss requirement

· The network computes the resources needed, such as the “effective” bandwidth.

· If the flow is accepted, the network allocates resources to ensure that the QoS is delivered as long as the source conforms to the contract.
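The accept-or-reject decision can be illustrated with a deliberately simple bandwidth-only check. The function `admit`, the link dictionaries with `capacity` and `reserved` fields, and the use of a single number as the flow's effective bandwidth are all assumptions for this sketch; real admission control also considers buffers, delay and loss.

```python
def admit(path_links, requested_bw):
    """Accept a flow only if every link on its path can carry it.

    path_links: list of dicts with 'capacity' and 'reserved' in the same units.
    requested_bw: effective bandwidth of the new flow (hypothetical single value).
    """
    fits = all(link["reserved"] + requested_bw <= link["capacity"]
               for link in path_links)
    if fits:
        # Reserve on every hop so existing flows keep their QoS.
        for link in path_links:
            link["reserved"] += requested_bw
    return fits


path = [{"capacity": 10, "reserved": 6}, {"capacity": 10, "reserved": 2}]
first = admit(path, 3)    # fits on both links
second = admit(path, 2)   # would overload the first link, so it is rejected
```

A rejected flow leaves the reservations untouched, which is what protects the flows that were admitted earlier.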

3. Resource reservation protocol

· The Resource ReSerVation Protocol (RSVP) is a Transport layer protocol designed to reserve resources across a network for an integrated services Internet.

· RSVP does not transport application data but is rather an Internet control protocol, like ICMP, IGMP, or routing protocols.

· RSVP provides receiver-initiated setup of resource reservations for multicast or unicast data flows with scaling and robustness.

· RSVP can be used by either hosts or routers to request or deliver specific levels of quality of service (QoS) for application data streams or flows.

· RSVP defines how applications place reservations and how they can relinquish the reserved resources once the need for them has ended.

· RSVP operation will generally result in resources being reserved in each node along a path.

· RSVP is not itself a routing protocol and was designed to interoperate with current and future routing protocols.

· RSVP by itself is rarely deployed in telecommunications networks today, but the traffic engineering extension of RSVP, or RSVP-TE, is becoming more widely accepted nowadays in many QoS-oriented networks.

4. Packet scheduling

· Packet scheduling refers to the decision process used to choose which packets should be serviced or dropped.

· Buffer management refers to any particular discipline used to regulate the occupancy of a particular queue.

· Commonly used disciplines include drop-tail (FIFO) queueing, RED buffer management, CBQ (including a priority and round-robin scheduler), and variants of fair queueing such as Fair Queueing (FQ) and Deficit Round-Robin (DRR).

The different strategies for queue scheduling are:

1. FIFO QUEUEING

2. PRIORITY QUEUEING

3. FAIR QUEUEING

4. WEIGHTED FAIR QUEUEING

1) FIFO QUEUEING

• Transmission discipline: first-in, first-out.

• All packets are transmitted in order of their arrival.

• Buffering discipline: arriving packets are discarded if the buffer is full.

• It cannot provide differential QoS to different packet flows.

• The performance delivered is difficult to determine.

• A finite buffer determines the maximum possible delay.

• Buffer size determines the loss probability, which also depends on arrival and packet-length statistics.

FIFO Queuing with Discard Priority

FIFO queue management can be modified to provide different characteristics of packet-loss performance to different classes of traffic.

• Figure 7.42 (b) above shows an example with two classes of traffic.

• When the number of packets in the buffer reaches a certain threshold, arrivals of lower access priority (class 2) are not allowed into the system.

• Arrivals of higher access priority (class 1) are allowed as long as the buffer is not full.
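The threshold rule can be sketched as follows. For simplicity the example assumes that all arrivals occur back to back with no departures in between; the function name and the parameters `buffer_size` and `threshold` are hypothetical.

```python
from collections import deque


def fifo_discard_priority(arrivals, buffer_size, threshold):
    """Apply FIFO queueing with discard priority to a sequence of arrivals.

    arrivals: traffic classes in arrival order (1 = high, 2 = low priority).
    Returns the queue contents and the list of dropped classes.
    """
    queue, dropped = deque(), []
    for cls in arrivals:
        if len(queue) >= buffer_size:
            dropped.append(cls)               # buffer full: drop any class
        elif cls == 2 and len(queue) >= threshold:
            dropped.append(cls)               # above threshold: drop class 2 only
        else:
            queue.append(cls)
    return list(queue), dropped


queued, dropped = fifo_discard_priority([2, 1, 2, 2, 1, 1],
                                        buffer_size=4, threshold=2)
```

Once two packets are queued, class 2 arrivals are turned away while class 1 arrivals continue to be accepted until the buffer holds four packets.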

2) Head of Line (HOL) Priority Queuing

• The second queue scheduling approach defines a number of priority classes.

• A separate buffer is maintained for each priority class.

• The high-priority queue is serviced until it is empty, so high-priority packets have a lower waiting time.

• Buffers can be dimensioned for different loss probabilities.

• A surge in the high-priority queue can cause the low-priority queue to starve for resources.

• It provides differential QoS.

• High-priority classes can hog all of the bandwidth and starve lower-priority classes.

• Some isolation between classes is therefore needed.
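A small sketch of head-of-line priority service makes the starvation effect visible. It assumes one packet is transmitted per time slot and that no new packets arrive during the run; `hol_serve` and the packet labels are illustrative names.

```python
from collections import deque


def hol_serve(queues, slots):
    """Serve one packet per slot from the highest-priority non-empty queue.

    queues: list of deques, index 0 being the highest priority class.
    """
    order = []
    for _ in range(slots):
        for q in queues:
            if q:
                order.append(q.popleft())
                break   # lower classes wait while a higher class has packets
    return order


high = deque(["H1", "H2", "H3"])
low = deque(["L1", "L2"])
order = hol_serve([high, low], slots=4)
```

The low-priority queue is not served at all until the high-priority queue has emptied, which is why some isolation between classes is desirable.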

Sorting packets according to priority tags/Earliest due Date Scheduling

• This is the third approach to queue scheduling.

• Packets are sorted according to priority tags that reflect how urgently each packet needs to be transmitted.

• A priority tag is added to each packet; it consists of the priority class followed by the arrival time of the packet.

• Packets in the queue are sorted by tag and served as in the HOL priority system.

• Packets are queued in order of “due date”.

• Packets that require low delay get earlier due dates, and packets without delay requirements get indefinite or very long due dates.
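Due-date ordering maps naturally onto a priority queue. In this sketch the due date is taken to be the arrival time plus a per-packet delay budget, and packets without a delay requirement get an infinite due date; that definition, the function `edd_order` and the sample packets are assumptions for illustration.

```python
import heapq


def edd_order(packets):
    """Return packet names in earliest-due-date order.

    packets: list of (name, arrival_time, delay_budget); a budget of None
    means the packet has no delay requirement.
    """
    heap = []
    for seq, (name, arrival, budget) in enumerate(packets):
        due = float("inf") if budget is None else arrival + budget
        # seq breaks ties so packets with equal due dates keep arrival order
        heapq.heappush(heap, (due, seq, name))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


order = edd_order([("bulk", 0, None), ("video", 1, 10), ("voice", 2, 3)])
```

The voice packet arrives last but is served first because its due date (5) is the earliest; the bulk packet, having no deadline, goes last.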

3) Fair Queueing / Generalized Processor Sharing

· Fair queueing provides equal access to transmission bandwidth.

· Each user flow has its own logical queue which prevents hogging and allows differential loss probabilities

· C bits/sec is allocated equally among non-empty queues.

· Each flow is therefore served at C / n bits/second, where n is the number of flows with non-empty queues and C is the transmission bandwidth.

· Fairness: it protects well-behaved sources from misbehaving sources.

· Aggregation:

o Per-flow buffers protect flows from misbehaving flows

o Full aggregation provides no protection

o Aggregation into classes provides intermediate protection

· Drop priorities:

o Drop packets from buffer according to priorities

o Maximizes network utilization & application QoS

o Examples: layered video, policing at network edge.

The above figure 7.46 illustrates the differences between ideal or fluid flow and packet-by-packet fair queueing for packets of equal length.

• The idealized system assumes fluid flow from the queues, where the transmission bandwidth is divided equally among all non-empty buffers.

• The figure assumes that buffer 1 and buffer 2 each have a single L-bit packet to transmit at t=0 and that no subsequent packets arrive.

• The capacity is assumed to be C = L bits/second = 1 packet/second.

• The fluid-flow system transmits each packet at a rate of ½ packet/second and completes the transmission of both packets exactly at time t=2 seconds.

• The packet-by-packet fair queueing system transmits the packet from buffer 1 first and then the packet from buffer 2, so the packet completion times are 1 and 2 seconds.
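The two sets of completion times can be reproduced numerically. The sketch below assumes one packet per buffer, all present at t=0, with lengths expressed in packets and capacity in packets per second; the function names are hypothetical.

```python
def fluid_finish_times(lengths, capacity):
    """Completion times under ideal fluid-flow fair sharing."""
    remaining = dict(enumerate(lengths))
    finish, now = {}, 0.0
    while remaining:
        rate = capacity / len(remaining)        # equal share per non-empty buffer
        i = min(remaining, key=remaining.get)   # buffer that empties next
        dt = remaining[i] / rate
        for j in remaining:
            remaining[j] -= rate * dt           # every active buffer drains
        now += dt
        finish[i] = now
        del remaining[i]
    return [finish[i] for i in range(len(lengths))]


def packet_finish_times(lengths, capacity):
    """Completion times when whole packets are sent one after another."""
    now, times = 0.0, []
    for length in lengths:
        now += length / capacity
        times.append(now)
    return times


fluid = fluid_finish_times([1, 1], capacity=1)    # both buffers finish together
packet = packet_finish_times([1, 1], capacity=1)  # buffer 1, then buffer 2
```

With two equal packets the fluid system finishes both at t=2, whereas the packet-by-packet system finishes them at t=1 and t=2.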

The above figure 7.48 illustrates the differences between ideal or fluid flow and packet-by-packet fair queueing for packets of variable length.

4) Weighted Fair Queuing (WFQ)

• WFQ addresses the situation in which different users have different requirements.

• Each user flow has its own buffer and its own weight.

• The weight determines the flow's relative bandwidth share.

• If buffer 1 has weight 1 and buffer 2 has weight 3, then when both buffers are nonempty, buffer 1 will receive 1/(1+3)=1/4 of the bandwidth and buffer 2 will receive ¾ of the bandwidth.

In the above figure,

• In the fluid-flow system, the transmission of the packet from buffer 2 is completed at time t=4/3, and the packet from buffer 1 is completed at t=2 seconds.

• In the above figure, buffer 1 would receive 1 bit/round and buffer 2 would receive 3 bits/round.

• Packet-by-packet weighted fair queueing calculates the finish tag of the kth packet of flow i as F(i,k,t) = max{F(i,k-1,t), R(t)} + P(i,k,t)/wi, where R(t) is the round number when the packet arrives at time t, P(i,k,t) is the packet length and wi is the weight of flow i.

• The above figure also shows the completion times for packet-by-packet weighted fair queueing.

• The finish tag for buffer 1 is F(1,1) = R(0) + 1/1 = 1 and the finish tag for buffer 2 is F(2,1) = R(0) + 1/3 = 1/3.

• Therefore the packet from buffer 2 is served first, followed by the packet from buffer 1.
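The numbers in this example can be checked with exact fractions. The sketch assumes unit-length packets, weights 1 and 3, a capacity of 1 packet/second and R(0) = 0 with no earlier packets (previous finish tag 0); `finish_tag` and `gps_finish_times` are illustrative helper names.

```python
from fractions import Fraction


def finish_tag(prev_tag, round_number, length, weight):
    """F = max(previous finish tag, round number at arrival) + length / weight."""
    return max(prev_tag, round_number) + Fraction(length, weight)


def gps_finish_times(lengths, weights, capacity=1):
    """Fluid-flow completion times when bandwidth is shared by weight."""
    remaining = {i: Fraction(l) for i, l in enumerate(lengths)}
    finish, now = {}, Fraction(0)
    while remaining:
        total_w = sum(weights[i] for i in remaining)
        # time each active buffer would need to empty at its current share
        dt, i = min((remaining[j] * total_w / (capacity * weights[j]), j)
                    for j in remaining)
        for j in remaining:
            remaining[j] -= dt * capacity * Fraction(weights[j], total_w)
        now += dt
        finish[i] = now
        del remaining[i]
    return [finish[i] for i in range(len(lengths))]


tags = {"buffer1": finish_tag(0, 0, 1, 1), "buffer2": finish_tag(0, 0, 1, 3)}
order = sorted(tags, key=tags.get)            # smallest finish tag is served first
fluid = gps_finish_times([1, 1], [1, 3])      # [buffer 1, buffer 2]
```

The finish tags come out as 1 and 1/3, so buffer 2 is served first, and the fluid-flow completion times are 2 seconds for buffer 1 and 4/3 seconds for buffer 2.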

Deficit round robin scheduler

· Deficit round robin (DRR) is a modified weighted round robin scheduling discipline.

· It can handle packets of variable size without knowing their mean size.

· A maximum packet size number is subtracted from the packet length, and packets that exceed that number are held back until the next visit of the scheduler.

· WRR serves every nonempty queue, whereas DRR serves the packet at the head of a nonempty queue only if the queue's deficit counter is at least the packet's size.

· If it is lower, the deficit counter is increased by a given value called the quantum.

· The deficit counter is decreased by the size of each packet served.
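A compact DRR sketch is given below. It follows the commonly used formulation in which the quantum is added to a queue's deficit counter on every visit and the counter is reset when the queue empties; this differs slightly in wording from the description above and should be read as one possible interpretation. The function name and the sample packet sizes are hypothetical.

```python
from collections import deque


def drr(queues, quantum, rounds):
    """Run deficit round robin and return (queue_index, packet_size) in service order.

    queues: list of deques holding packet sizes in bytes.
    """
    deficit = [0] * len(queues)
    served = []
    for _ in range(rounds):
        for i, q in enumerate(queues):
            if not q:
                deficit[i] = 0          # idle queues do not accumulate credit
                continue
            deficit[i] += quantum       # credit granted on each visit
            while q and q[0] <= deficit[i]:
                size = q.popleft()
                deficit[i] -= size      # pay for the packet just served
                served.append((i, size))
            if not q:
                deficit[i] = 0
    return served


queues = [deque([300, 300]), deque([700])]
served = drr(queues, quantum=500, rounds=2)
```

In the first round the 700-byte packet exceeds its queue's credit of 500 and is held back; in the second round the credit has grown to 1000, so the packet is sent.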

Weighted fair queuing

· Weighted fair queuing (WFQ) is a data packet scheduling technique allowing different scheduling priorities to statistically multiplexed data flows.

· WFQ is a generalization of fair queuing (FQ).

· Both in WFQ and FQ, each data flow has a separate FIFO queue.

· In FQ, with a link data rate of R, at any given time the N active data flows (the ones with non-empty queues) are serviced simultaneously, each at an average data rate of R / N. Since each data flow has its own queue, an ill-behaved flow (one that has sent larger packets or more packets per second than the others since it became active) will only punish itself and not other sessions.

· Contrary to FQ, WFQ allows different sessions to have different service shares.

· If N data flows currently are active, with weights w1,w2...wN, data flow number i will achieve an average data rate of

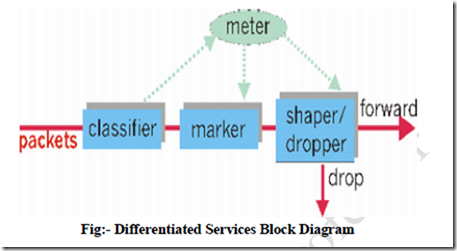
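As a quick numeric illustration, the sketch below assumes the rate in question is the usual weighted share, R · wi / (w1 + w2 + ... + wN); the function name `wfq_rates` and the sample values are hypothetical.

```python
def wfq_rates(link_rate, weights):
    """Average rate of each active flow, assuming a share proportional to its weight."""
    total = sum(weights)
    return [link_rate * w / total for w in weights]


rates = wfq_rates(100, [1, 3])   # two active flows on a link of rate 100
```

With weights 1 and 3 the flows receive one quarter and three quarters of the link, which matches the 1/4 and 3/4 split used in the earlier two-buffer example. Setting all weights equal reduces this to the fair-queueing share R / N.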

Differentiated Services

· Differentiated Services or DiffServ is a computer networking architecture that specifies a simple, scalable and coarse-grained mechanism for classifying and managing network traffic and providing Quality of Service (QoS) guarantees on modern IP networks.

· DiffServ can, for example, be used to provide low-latency, guaranteed service (GS) to critical network traffic such as voice or video while providing simple best-effort traffic guarantees to non-critical services such as web traffic or file transfers.

· DiffServ operates on the principle of traffic classification, where each data packet is placed into a limited number of traffic classes, rather than differentiating network traffic based on the requirements of an individual flow.

· Each router on the network is configured to differentiate traffic based on its class.

· Each traffic class can be managed differently, ensuring preferential treatment for higher-priority traffic on the network.

· DiffServ simply provides a framework to allow classification and differentiated treatment.

· DiffServ does recommend a standardized set of traffic classes (discussed below) to make interoperability between different networks and different vendors' equipment simpler.

· DiffServ relies on a mechanism to classify and mark packets as belonging to a specific class.

· DiffServ-aware routers implement Per-Hop Behaviors (PHBs), which define the packet forwarding properties associated with a class of traffic.

· Different PHBs may be defined to offer, for example, low-loss, low-latency forwarding properties or best-effort forwarding properties.

· All the traffic flowing through a router that belongs to the same class is referred to as a Behavior Aggregate (BA).

Per-Hop Behavior

· The Per-Hop Behavior (PHB) is indicated by encoding a 6-bit value—called the Differentiated Services Code Point (DSCP)—into the 8-bit Differentiated Services (DS) field of the IP packet header.

· The DS field occupies the same byte as the former TOS field; the DSCP occupies the upper 6 bits and ECN occupies the lower 2 bits.

· In theory, a network could have up to 64 (i.e. 2^6) different traffic classes using different markings in the DSCP.

· This gives a network operator great flexibility in defining traffic classes.

· In practice, however, most networks use the following commonly-defined Per-Hop Behaviors:

· Default PHB: typically best-effort traffic

· Expedited Forwarding (EF) PHB: dedicated to low-loss, low-latency traffic

· Assured Forwarding (AF) PHB: gives assurance of delivery under prescribed conditions

· Class Selector PHBs: defined to maintain backward compatibility with the IP Precedence field
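The bit layout of the DS byte can be illustrated with two small helpers. The sketch uses the standard layout in which the DSCP is the upper six bits and ECN the lower two, and the standard EF code point 46 (binary 101110); the helper names are hypothetical.

```python
def make_ds_field(dscp, ecn=0):
    """Pack a 6-bit DSCP and a 2-bit ECN value into the 8-bit DS byte."""
    return (dscp << 2) | ecn


def split_ds_field(ds_byte):
    """Return (dscp, ecn) from an 8-bit DS byte."""
    dscp = ds_byte >> 2      # upper six bits
    ecn = ds_byte & 0b11     # lower two bits
    return dscp, ecn


EF = 46                      # Expedited Forwarding code point
ds = make_ds_field(EF)       # byte value as it appears in the IP header
```

An EF-marked packet with ECN bits cleared therefore carries 0xB8 in the DS byte, which is the value typically seen in packet captures of voice traffic.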

Classification of Resource allocation

Resource allocation can be classified by various approaches,

1. Router based versus Host based

2. Fixed versus Adaptive

3. Window based versus Rate based

Router based versus Host based

· Resource allocation can be classified by whether a router or a host sets up the required resources.

· In a router-based scheme, routers have primary responsibility for congestion control.

· A router selectively forwards packets and drops them, if required, to manage the allocation of existing resources.

· A router also sends information to end hosts on the amount of traffic they can generate and send.

· In a host-based scheme, end hosts have primary responsibility for congestion control.

· End hosts observe network conditions such as throughput, delay and packet loss, and adjust the rate at which they generate and send packets accordingly.

Fixed versus Adaptive

· In a fixed reservation scheme, the end host requests resources at the router level before a flow is established.

· Each router then allocates enough resources to satisfy the request.

· If the request cannot be satisfied at some router because resources are unavailable, the router rejects the flow.

· In an adaptive reservation scheme, the end hosts begin sending data without first reserving any resources at the routers and then adjust their sending rate according to the feedback they receive.

Window based versus Rate based

· In window-based resource allocation, a receiver chooses a window size. This window size is dependent on the buffer space available to the receiver.

· The receiver then sends the window size to the sender.

· In rate based resource allocation, a receiver specifies the maximum rate of bits per second it can handle.

· A sender sends traffic in compliance with the rate advertised by the receiver.

· The reservation based allocation also involves reservation in b/s.

Fairness in Resource Allocation

The effectiveness of a resource allocation scheme can be evaluated with two primary metrics:

1. Throughput

2. Delay

· Throughput should be as large as possible, and the delay for a flow should normally be minimal.

· As the number of packets admitted into the network increases, throughput tends to improve; as it increases further, however, the links become saturated and the delay also increases.

· A resource allocation scheme must also be fair, i.e. each traffic flow through the network receives an equal share of bandwidth.

Resource Allocation in ATM Network

· Traffic management and congestion control in ATM networks differ from those in packet-switched networks.

· Congestion control is difficult in a network with high-speed multiplexing and small packets.

· Congestion can be avoided by maintaining constant bit rate in ATM networks.

Constant Bit Rate (CBR)

In ATM networks, one of the important requirements is low cell delay with a constant bit rate of cell delivery. This is most important for delay-sensitive applications such as voice communication, which cannot tolerate long delays between cells.

Cell-delay variations can occur at both source and destination sides.

In ATM networks, a certain algorithm is used to maintain the Constant Bit Rate (CBR).

Let Cn be the delay variation of the nth cell, and let k be the constant rate at which cells are delivered to users.

The interval between two consecutive arriving cells is 1/k

Let tn be the time at which the nth cell is received. Then the variable delay for nth cell is represented as,

We assume c1 is the first cell and the second cell is represented as,

· Cell-delay variation can be reduced by increasing both the network resources and the data rate at the user interface. Because cells are small and fixed in size, their delay at the network level is lower.

· To avoid congestion in any network, resource allocation has to be controlled.

· The key factors that influence traffic management in ATM networks are latency and cell delay variation.

Resource Management using Virtual Paths

· In ATM networks, the main resource management task is to use virtual paths properly.

· A number of Virtual Channel Connections (VCCs) are grouped into a Virtual Path Connection (VPC).

· It provides equal capacity to all virtual channels within the virtual path.

· The traffic management parameters in ATM networks are cell-loss ratio, cell-transfer delay and cell-delay variation.

· The performance of virtual channel depends on the performance of virtual paths

· There are two types of approaches to resource allocation for virtual paths.

· In the first approach, the ATM network can set the capacity of the virtual path to meet the peak data rates of all VCCs within a VPC. However, this approach wastes resources.

· In the second approach, an ATM network can set the capacity of the VPC to be greater than or equal to the average capacity of all VCCs.
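The difference between the two approaches can be shown with a few made-up numbers. The sketch reads "average capacity of all VCCs" as the sum of the VCCs' average rates, which is an interpretation rather than something stated above; the function name and the rate values are hypothetical.

```python
def vpc_capacity(vccs, approach):
    """Capacity to provision for a VPC under one of the two approaches.

    vccs: list of (peak_rate, average_rate) pairs in the same units.
    """
    if approach == "peak":
        return sum(peak for peak, _ in vccs)      # first approach
    if approach == "average":
        return sum(avg for _, avg in vccs)        # lower bound of second approach
    raise ValueError("approach must be 'peak' or 'average'")


vccs = [(10, 4), (8, 2), (6, 3)]
peak_cap = vpc_capacity(vccs, "peak")
avg_cap = vpc_capacity(vccs, "average")
```

Provisioning for the peaks reserves 24 units even though the VCCs use 9 on average, which illustrates why the first approach is wasteful; the second approach reserves less but relies on the VCCs not all peaking at once.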

Connection Admission Control

An ATM network accepts the connection only if it meets the QoS requirements.

Once the network accepts connection, the user and the network enter into the traffic contract.

The traffic contract can be characterized by four parameters:

o Peak Cell Rate

o Cell Delay Variation

o Sustainable Cell Rate

o Burst Tolerance
